# Quotes and discourse on AI

Quotes and discourse on AI that I found interesting. There is so much discussion going on around AI that it is difficult to keep up.

Personally, I'm trying to keep an open mind about it and not lean too much towards either side. Instead, I'm keeping an eye on how things are playing out.

Please read my disclaimer on quotes.

# 10 March 2025

(Jack Clark is one of Anthropic's cofounders)

What’s one underrated big idea? People underrate how significant and fast-moving AI progress is. We have this notion that in late 2026, or early 2027, powerful AI systems will be built that will have intellectual capabilities that match or exceed Nobel Prize winners. They’ll have the ability to navigate all of the interfaces… they will have the ability to autonomously reason over kind of complex tasks for extended periods. They’ll also have the ability to interface with the physical world by operating drones or robots. Massive, powerful things are beginning to come into view, and we’re all underrating how significant that will be.

# 25 March 2025

And with regards to AI taking jobs - it obviously will become a serious problem in future. But being a doomer right now is like lying down in a parking lot waiting to get run over - you're surrendering to a pointless outcome while the rest of the world keeps moving. There's still so much to build and accomplish.

# 9 April 2025

An interview (21 March 2025) with a guy who "makes $750 a day using 'Vibe Coding.'"

Tweet (7 April 2025) by Tobi Lutke, the current CEO of Shopify:

Reflexive AI usage is now a baseline expectation at Shopify

Using AI effectively is now a fundamental expectation of everyone at Shopify. It's a tool of all trades today, and will only grow in importance.

We will add AI usage questions to our performance and peer review questionnaire.

Probably the most striking part:

Before asking for more Headcount and resources, teams must demonstrate why they cannot get what they want done using AI.

# 16 April 2025

Understanding Deep Learning (12 November 2024) by Simon J.D. Prince:

We should consider that capitalism primarily drives the development of AI and that legal advances and deployment for social good are likely to lag significantly behind. We should reflect on whether it’s possible, as scientists and engineers, to control progress in this field and to reduce the potential for harm. We should consider what kind of organizations we are prepared to work for. How serious are they in their commitment to reducing the potential harms of AI?

# 19 June 2025

I went to an AI talk last night. The speaker noted the large energy usage of the data centres that power the AI applications we use today. Someone in the audience suggested that the negative effects of AI might be offset by the positive applications, including scientific advancements, that might be discovered as a result of modern AI.

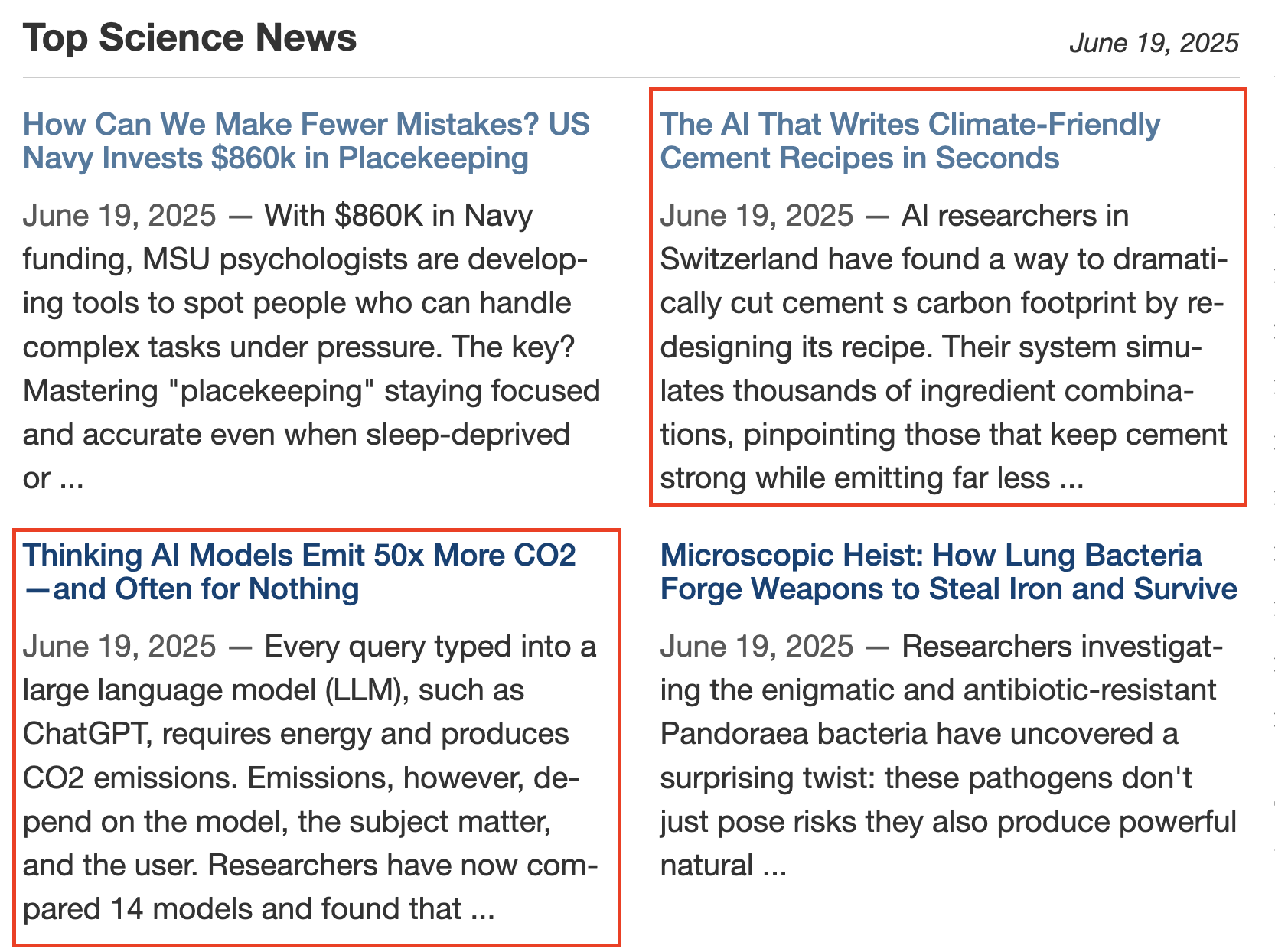

Then, today, I saw these two articles on ScienceDaily and thought they were a good illustration of the dichotomy between the good and bad potential effects of AI:

(The articles are "Thinking AI models emit 50x more CO2—and often for nothing" and "The AI that writes climate-friendly cement recipes in seconds", in case you're interested in reading them.)

# 15 July 2025

The sound of inevitability (titled "LLM Inevitabilism" on Hacker News):

These are some big names in the tech world, all framing the conversation in a very specific way. Rather than “is this the future you want?”, the question is instead “how will you adapt to this inevitable future?”. Note also the threatening tone present, a healthy psychological undercurrent encouraging you to go with the flow, because you’d otherwise be messing with scary powers way beyond your understanding.

I've been encountering more and more AI-sceptic articles and I tend to agree with them. My viewpoint has evolved from a reluctant acceptance of the narrative pushed by Big Tech to a more hopeful one of personal agency.

# 12 August 2025

GPT-5 is a joke. Will it matter?

I would quote the entire article, but for pragmatism's sake, here's one quote:

It's clear that there is a cohort of boosters, influencers, and backers who will promote OpenAI’s products no matter the reality on the ground. It’s clearer than ever that, like so many well-capitalized tech ventures, OpenAI’s aim is simply to create a product that is either addictive to users to maximize engagement or to dully automate a set of work tasks, or both.

Thank you for reading my blog! If you enjoyed this post, you're welcome to subscribe via RSS (I can recommend NetNewsWire on iOS).